“Last year’s mobile data traffic was nearly twelve times the size of the entire global Internet in 2000.”

That little factoid from Cisco’s 2013 Visual Networking Index illustrates how the business world is evolving as wireless, fibre and satellite communications technologies deliver faster access to businesses and households.

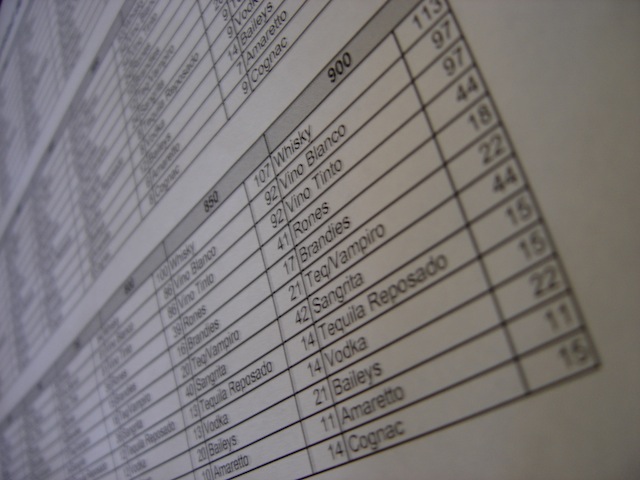

Mobile data growth isn’t slowing; Cisco estimates global mobile data traffic was 885 petabytes a month and expects it to grow fourteen-fold over the next five years.
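To put that forecast in perspective, here is a rough back-of-the-envelope sketch of what fourteen-fold growth over five years implies. It uses only the two figures quoted above and assumes growth compounds smoothly from year to year, which is a simplification rather than anything Cisco states; the variable names are purely illustrative.

```python
# Figures quoted in the article
baseline_pb_per_month = 885  # global mobile data traffic, petabytes a month
growth_multiple = 14         # "fourteen-fold"
years = 5

# Assumption: growth compounds at a constant annual rate
annual_factor = growth_multiple ** (1 / years)
annual_growth_pct = (annual_factor - 1) * 100

# Implied monthly traffic at the end of the period
projected_pb_per_month = baseline_pb_per_month * growth_multiple
projected_eb_per_month = projected_pb_per_month / 1000  # 1 exabyte = 1,000 petabytes

print(f"Implied annual growth: {annual_growth_pct:.1f}%")
print(f"Implied traffic after {years} years: {projected_eb_per_month:.2f} exabytes a month")
```

On those assumptions, traffic would need to grow by roughly 70 percent every year, ending up at a little over 12 exabytes a month.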

Speaking at the Australian Cisco Live Conference, Dr. Robert Pepper, Cisco Vice President of Global Technology Policy, and Kevin Bloch, Chief Technical Officer of Cisco Australia and New Zealand, walked the local media through some of the Asia-Pacific results of the Visual Networking Index.

Dealing with this sort of data load is going to challenge telcos, who were hit badly by the introduction of the smartphone and the demands it put on their cellphone networks.

One way to deal with the data load is heterogeneous networks, or HetNets, where phones automatically switch from the telcos’ cellphone systems to local wireless networks without the caller noticing.

The challenge with that is what’s in it for the private property owners whose networks the telcos will need to access for the HetNets to work.

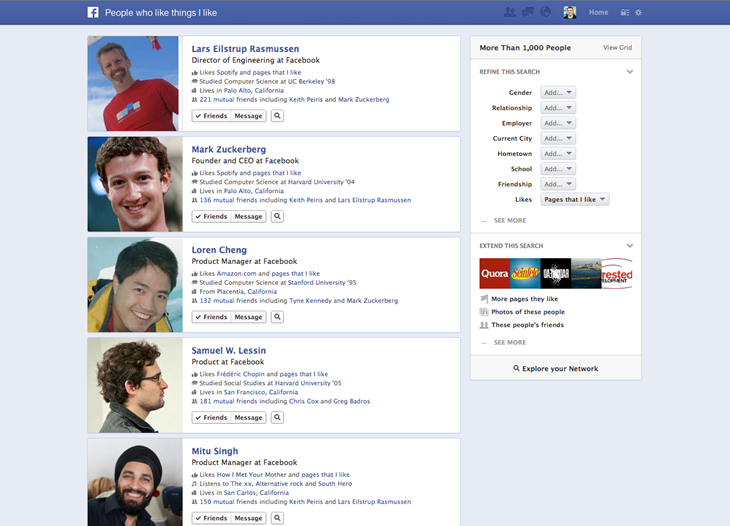

One of the solutions, in Dr. Pepper’s opinion, is to give business owners access to the rich data the telcos will be gathering on the customers using the HetNets.

This Big Data idea ties into PayPal’s view of future commerce and shows just how powerful pulling together disparate strands of information is going to be for businesses in the near future.

But many landlords and wireless network owners are going to want more than just access to some of the telco data, and we can also be sure that the phone companies are going to be careful about what customer data they share with their partners.

It may well be that we’ll see telcos providing free high-capacity fibre connections and wireless networks into shopping malls, football stadiums, hotels and other high-traffic locations so they can capture high-value smartphone users.

One thing is for sure: fibre connections are necessary to carry the data load.

Anyone who thinks the future of broadband lies in wireless networks has to understand that the connections to the base stations don’t magically happen; high-speed fibre is essential to carry the signals.

Getting both the fibre and the wireless base stations in place is going to be one of the challenges for telcos and their data-hungry customers over the next decade.

Paul travelled to the Cisco Live event in Melbourne courtesy of Cisco Systems.