This is the unedited, submitted version of ‘Is BlackBerry ripe for a comeback’ that appeared in Technology Spectator on 30 July, 2014.

“What do we do well?” is the question BlackBerry CEO John Chen asked when he took the reins of the Canadian communications company last November.

Chen was speaking on Tuesday at BlackBerry’s Security Summit in New York, where he and his executive team laid out the company’s roadmap back to profitability.

Since the arrival of the iPhone and Android smartphones, times have been tough for the once-iconic business phone vendor: enterprise users deserted BlackBerry’s handsets and the company struggled to find a new direction under former CEO Thorsten Heins.

Back to BlackBerry’s secure roots

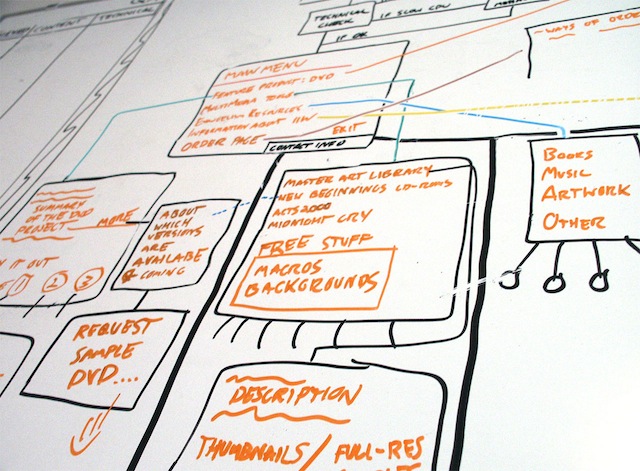

In Chen’s view, the company’s future lies in its roots: providing secure communications for large organisations. “It became obvious to us that security, productivity and collaboration have to be it,” he said.

“This is not to say we are not interested in the consumer, but we have to anchor ourselves around the enterprise,” Chen said, in a clear move to distance himself from his predecessor and from products like the ill-fated BlackBerry PlayBook.

An early step in this refocus on enterprise security is the acquisition of German voice security company Secusmart, which was the cornerstone of Chen’s New York keynote.

BlackBerry’s acquisition of the company is a logical move, says Secusmart CEO Dr Hans-Christoph Quelle, who points out that the two organisations have been working closely together for several years.

“It fits perfectly,” says Quelle, describing how Secusmart technology has been increasingly incorporated into BlackBerry’s devices. “We are not strangers, having worked together since 2009.”

Secusmart’s key selling point has been its adoption by NATO and European government agencies. The Snowden revelations about the US bugging of Angela Merkel’s phone, the Russian FSB’s leaking of intercepted US State Department conversations and the release of intercepted Ukrainian separatist conversations after the shooting down of MH17 have all focused European attention on the security of voice communications.

Launching new services

Along with the acquisition of Secusmart, BlackBerry will launch a new enterprise service in November and the new Passport handset in December, as well as a range of security applications including BlackBerry Guardian, a new service that will scan Android apps for malicious software.

BlackBerry’s executives were at pains to emphasise that their products aren’t tied to any single smartphone operating system and don’t depend on customers buying BlackBerry handsets, although customers will need BlackBerry devices to get the maximum security benefits.

“We will provide the best level of security possible to as many target devices out there as possible,” said Dan Dodge, who heads BlackBerry’s QNX embedded devices division.

Longer term plans

In the longer term, BlackBerry sees the QNX division as one of the major drivers of future revenue as the Internet of Things is rolled out across industries.

QNX was acquired by BlackBerry in 2010 to broaden the communications company’s product range. Now it is one of the pillars of the organisation’s future, as Chen and his team believe connected devices will need secure and reliable software.

Dodge says: “With the internet of things, you can have devices that can change your world.”

While QNX is best known for its in-car operating system (it underpins Apple’s CarPlay system being rolled out for BMW, as well as its own system deployed in Audis), the company’s products are also used in industrial applications ranging from wind turbines to manufacturing plants.

Despite BlackBerry’s announcements in New York, the company is still facing challenges in the marketplace, with the Ford Motor Company announcing earlier this week that it will stop issuing BlackBerry handsets to its employees by the end of the year and replace them with iPhones.

Chen, though, is dismissive of Apple’s and IBM’s moves into BlackBerry’s enterprise markets: “What we do and what they do is completely different.”

Focusing BlackBerry

The focus for Chen is to differentiate BlackBerry and play to its strengths, particularly in the four markets it calls ‘regulated industries’ (government, health care, financial services and energy), which the company claims make up half of enterprise IT spending.

Whether this is enough to bring BlackBerry back on track remains to be seen, but Chen says this is where he sees the company’s future: “This is why we are so focused on enterprise and so focused on these pillars.”

For BlackBerry, the emphasis on enterprise communications is a step back to its profitable past. It may well succeed as businesses become more security-conscious in a post-Snowden world.

Paul travelled to the BlackBerry Security Summit in New York as a guest of the company.