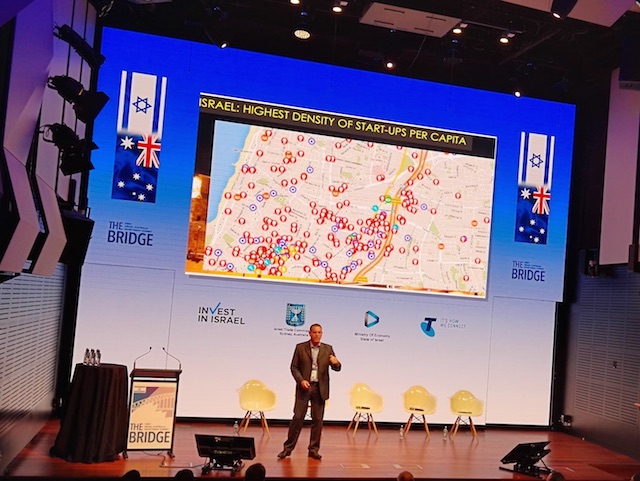

Things are going crazy in the Israeli startup scene as investors, multinationals and startups pile into the country’s tech sector.

To understand what’s happening, I spent the morning at The Bridge, an Israel Australia Investment Summit staged by the Israeli Trade Commission and Invest in Israel.

Of the morning sessions, the two panel segments gave the most insight into what’s driving the Israeli tech sector, with Nimrod Kolovski of Jerusalem Venture Partners emphasising industry-government-academia collaboration, military spending and tight personal networks.

“In Israel we can make two phone calls – to someone who was with them in the army and to someone who they worked with at the last company. You don’t get a chance to repair your reputation in Israel,” says Kolovski of those tight personal networks.

Kolovski also highlighted that an important part of venture capital culture – just as much in the US as in Israel – is the willingness to admit failure: “if you don’t then you’ll lose credibility”.

The broad message from the morning’s sessions is that the Israeli tech sector happens to have a combination of factors that aligns with the Silicon Valley and US corporate view of the world, coupled with a strong underpinning of high-level, defense-led research and personal networks forged to a large degree during National Service.

For a long time I’ve been skeptical of the Israeli and Silicon Valley model being replicable in other countries, particularly Australia, and the morning’s sessions only confirm that view. There is more to this which I intend to explore in some future blog posts.

The lesson for other countries, though, is that personal networks, research and access to capital matter in creating new industry hubs. The challenge for each country or region is to find the combination that plays to its society’s and industry’s strengths.

For Israel, it’s hard to see how its tech sector isn’t going to continue to thrive in the current climate. However, that success is the result of long-term, focused investments, research and policies. Taking the long view is probably the most important lesson of all.